Linear Regression Versus Logistic Regression

Linear regression and logistic regression are both fundamental techniques in the field of statistics and machine learning. They are used for different types of problems and have distinct characteristics. In this discussion, we will explore the differences between linear regression and logistic regression, accompanied by relevant equations and visual representations.

Linear Regression:

Linear regression is used for predicting a continuous outcome variable (also called dependent variable) based on one or more predictor variables (independent variables). The equation for simple linear regression with one predictor variable is:

$$Y = \beta_0 + \beta_1 X + \epsilon$$

Where:

- $Y$ is the dependent variable.

- $X$ is the independent variable.

- $\beta_0$ is the intercept.

- $\beta_1$ is the slope.

- $\epsilon$ is the error term.

For multiple linear regression with $n$ predictor variables:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \epsilon$$

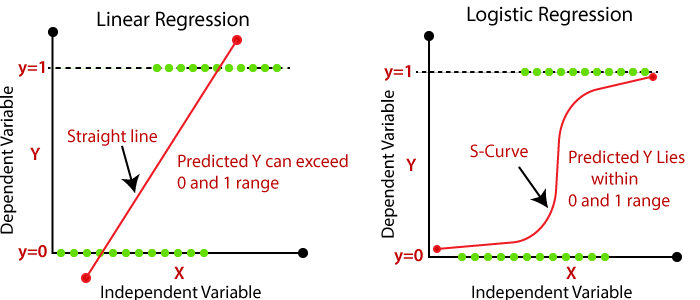

Linear regression aims to find the best-fitting line through the data by minimizing the sum of squared differences between the observed and predicted values.
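As a minimal sketch of what "minimizing the sum of squared differences" means for a single predictor, the closed-form least-squares estimates of the intercept and slope can be computed directly. The function name `fit_simple_ols` and the data are made up for illustration; a real analysis would normally use a statistics library.

```python
def fit_simple_ols(xs, ys):
    """Return (intercept, slope) that minimize the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of X and Y divided by variance of X
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through the point of means
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Illustrative data lying exactly on the line y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
b0, b1 = fit_simple_ols(xs, ys)
print(b0, b1)  # intercept 1.0, slope 2.0
```

Because the sample points sit exactly on a line, the residuals are all zero and the estimates recover the line's intercept and slope.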

Logistic Regression:

Logistic regression is used for predicting the probability of an event occurring. It’s commonly used for binary classification problems (two possible outcomes). The logistic regression equation is:

$$P(Y=1) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 X)}}$$

Where:

- $P(Y=1)$ is the probability of the event $Y$ occurring.

- $X$ is the independent variable.

- $\beta_0$ is the intercept.

- $\beta_1$ is the coefficient for the independent variable.

- $e$ is the base of the natural logarithm.

The logistic function (sigmoid function) transforms the linear combination of predictors into values between 0 and 1, representing probabilities.
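The sigmoid transformation can be sketched in a few lines of standard-library Python. The helper names `sigmoid` and `predict_probability` and the coefficient values are assumptions chosen for illustration, not fitted results.

```python
import math


def sigmoid(z):
    """Map any real number z to a value between 0 and 1."""
    # Branching on the sign avoids overflow in math.exp for large |z|
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_probability(x, beta0, beta1):
    """P(Y=1) for one predictor: sigmoid of the linear combination."""
    return sigmoid(beta0 + beta1 * x)


# Hypothetical coefficients, for illustration only
beta0, beta1 = -2.0, 0.5
for x in (-10.0, 4.0, 20.0):
    print(x, predict_probability(x, beta0, beta1))
```

With these coefficients the linear combination is zero at x = 4, so the predicted probability there is exactly 0.5; smaller x values give probabilities near 0 and larger ones give probabilities near 1.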

Key Similarities:

Parametric Models: Both linear and logistic regressions are parametric models, meaning they make assumptions about the functional form of the relationship between variables.

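Linear Relationship: Both models are built on a linear combination of the independent variable(s). Linear regression assumes this combination is linearly related to the dependent variable itself, while logistic regression assumes it is linearly related to the log-odds of the event rather than to the probability directly.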
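For logistic regression, the linearity holds on the log-odds scale. Rearranging the logistic equation given earlier (using $1 - P(Y=1) = \frac{e^{-(\beta_0 + \beta_1 X)}}{1 + e^{-(\beta_0 + \beta_1 X)}}$) gives:

$$\frac{P(Y=1)}{1 - P(Y=1)} = e^{\beta_0 + \beta_1 X} \quad\Longrightarrow\quad \log\frac{P(Y=1)}{1 - P(Y=1)} = \beta_0 + \beta_1 X$$

So a one-unit increase in $X$ changes the log-odds by $\beta_1$, which is the sense in which logistic regression is "linear".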

Key Differences:

Dependent Variable:

- Linear Regression: The dependent variable is continuous and can take any real value.

- Logistic Regression: The dependent variable is binary (0 or 1). The model estimates the probability that it equals 1.

Output Interpretation:

- Linear Regression: The output is the predicted value of the dependent variable.

- Logistic Regression: The output is the probability of the event occurring.
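The difference in outputs can be illustrated with a small sketch. The function names, the coefficients, and the 0.5 decision threshold are all assumptions for illustration; the threshold in particular is a common default and not something the model itself prescribes.

```python
import math


def linear_predict(x, beta0, beta1):
    """Linear regression output: an unbounded predicted value."""
    return beta0 + beta1 * x


def logistic_predict_proba(x, beta0, beta1):
    """Logistic regression output: a probability between 0 and 1."""
    z = beta0 + beta1 * x
    return 1.0 / (1.0 + math.exp(-z))


def classify(probability, threshold=0.5):
    """Turn a probability into a 0/1 label using an assumed threshold."""
    return 1 if probability >= threshold else 0


# Hypothetical coefficients shared by both models, for comparison only
beta0, beta1 = 1.0, 2.0
x = 10.0
value = linear_predict(x, beta0, beta1)        # 21.0, can be any real number
prob = logistic_predict_proba(x, beta0, beta1)  # close to 1
label = classify(prob)                          # 1
print(value, prob, label)
```

The linear prediction is read directly as an estimate of the dependent variable, whereas the logistic output needs a decision rule such as `classify` before it becomes a class label.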

Sigmoid Function:

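- Logistic regression passes the linear combination of predictors through the sigmoid function to obtain probabilities between 0 and 1. Linear regression applies no such transformation, so its predictions are unbounded.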

Applications:

Linear Regression:

- Predicting house prices based on square footage.

- Estimating sales based on advertising expenditure.

Logistic Regression:

- Predicting whether an email is spam or not.

- Medical diagnosis, like predicting if a patient has a certain disease based on test results.