Exploratory Factor Analysis (EFA) Results Interpretation

Appropriateness of data (adequacy)

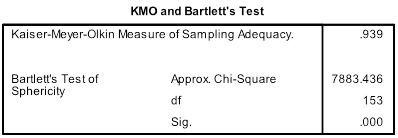

KMO Statistics (Kaiser-Meyer-Olkin measure of sampling adequacy)

- Marvelous: .90s

- Meritorious: .80s

- Middling: .70s

- Mediocre: .60s

- Miserable: .50s

- Unacceptable: <.50
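For intuition about where the KMO value comes from, the sketch below computes the overall and per-item KMO from a correlation matrix, using partial correlations derived from the inverse correlation matrix, and maps the overall value onto the labels above. The function names and the example matrix (four items that all correlate 0.5) are hypothetical illustrations, not output from any particular package.

```python
import numpy as np

# Labels from the KMO list above, checked from the highest cutoff down.
KMO_LABELS = [(0.90, "Marvelous"), (0.80, "Meritorious"), (0.70, "Middling"),
              (0.60, "Mediocre"), (0.50, "Miserable")]


def kmo(corr):
    """Return (overall KMO, per-item KMO) for a correlation matrix."""
    corr = np.asarray(corr, dtype=float)
    inv = np.linalg.inv(corr)
    # Partial correlations: p_ij = -inv_ij / sqrt(inv_ii * inv_jj)
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.where(off_diag, corr ** 2, 0.0)     # squared correlations
    p2 = np.where(off_diag, partial ** 2, 0.0)  # squared partial correlations
    overall = r2.sum() / (r2.sum() + p2.sum())
    per_item = r2.sum(axis=0) / (r2.sum(axis=0) + p2.sum(axis=0))
    return overall, per_item


def kmo_label(value):
    """Map a KMO value onto the descriptive labels."""
    for cutoff, label in KMO_LABELS:
        if value >= cutoff:
            return label
    return "Unacceptable"


# Hypothetical example: four items that all correlate 0.5 with each other.
corr = np.full((4, 4), 0.5)
np.fill_diagonal(corr, 1.0)
overall, per_item = kmo(corr)
print(round(overall, 3))  # 0.8
```

The KMO rises when the raw correlations are large relative to the partial correlations, that is, when the items share variance that common factors can explain.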

Bartlett’s Test of Sphericity

Tests the hypothesis that the correlation matrix is an identity matrix, in which:

- Diagonals are ones

- Off-diagonals are zeros

A significant result (Sig. < 0.05) indicates that the matrix is not an identity matrix; i.e., the variables are related to one another enough to run a meaningful EFA.
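To make the hypothesis concrete, the sketch below computes Bartlett's chi-square statistic with the standard formula χ² = -(n - 1 - (2p + 5)/6) · ln|R|, with p(p - 1)/2 degrees of freedom, where R is the p x p correlation matrix and n the sample size. It assumes NumPy and SciPy are installed; the function name, example matrix, and sample size are hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def bartlett_sphericity(corr, n):
    """Bartlett's test of sphericity for a p x p correlation matrix
    computed from n observations. Returns (chi-square, df, p-value)."""
    corr = np.asarray(corr, dtype=float)
    p = corr.shape[0]
    # slogdet avoids underflow when the determinant is very small
    _, logdet = np.linalg.slogdet(corr)
    statistic = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return statistic, df, chi2.sf(statistic, df)


# Hypothetical example: 4 items, all inter-correlated at 0.5, n = 200.
corr = np.full((4, 4), 0.5)
np.fill_diagonal(corr, 1.0)
stat, df, p_value = bartlett_sphericity(corr, n=200)
print(df, p_value < 0.05)  # 6 True
```

For an identity matrix the log-determinant is 0, so the statistic is 0 and the test is not significant, which matches the interpretation above.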

Communalities

A communality is the proportion of an item’s variance that is explained by the extracted factors; informally, it reflects the extent to which an item correlates with all other items. Higher communalities are better. If the communality for a particular variable is low (between 0.0 and 0.4), that variable may struggle to load significantly on any factor. In the table below, look for low values in the “Extraction” column. Low values indicate candidates for removal after you examine the pattern matrix.
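As a sketch of how extraction communalities relate to the loadings, the function below computes h² = diag(P Φ Pᵀ), assuming P is the pattern matrix (items in rows, factors in columns) and Φ is the factor correlation matrix; for an orthogonal solution Φ is the identity and h² is simply each row's sum of squared loadings. The function name, item names, and loadings are hypothetical.

```python
import numpy as np


def communalities(pattern, factor_corr=None):
    """Extraction communalities h2_i = diag(P @ Phi @ P.T)_i.

    pattern: items x factors loading (pattern) matrix P.
    factor_corr: factors x factors correlation matrix Phi; None means an
    orthogonal solution (Phi = identity), where h2 is the row sum of squares.
    """
    P = np.asarray(pattern, dtype=float)
    if factor_corr is None:
        Phi = np.eye(P.shape[1])
    else:
        Phi = np.asarray(factor_corr, dtype=float)
    return np.einsum("ij,jk,ik->i", P, Phi, P)


# Hypothetical loadings for three items on two orthogonal factors.
items = ["x1", "x2", "x3"]
h2 = communalities([[0.8, 0.1], [0.7, 0.0], [0.2, 0.3]])
low = [item for item, value in zip(items, h2) if value < 0.4]
print(low)  # ['x3']
```

Here x3 (h² = 0.13) would be flagged as a removal candidate, pending a look at the pattern matrix.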

Factor Structure

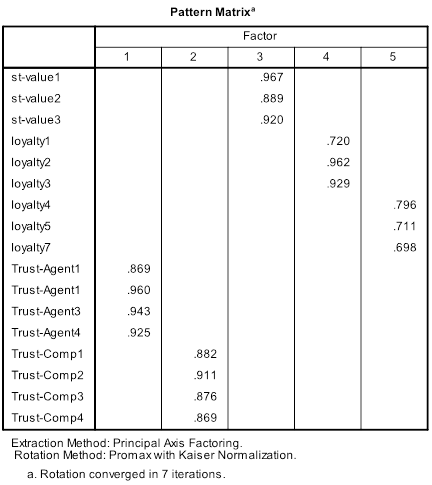

Factor structure refers to the intercorrelations among the variables being tested in the EFA. Using the pattern matrix shown here as an illustration, we can see that variables group into factors; more precisely, they “load” onto factors. This example illustrates a very clean factor structure: convergent and discriminant validity are evident from the high loadings within factors and the absence of major cross-loadings between factors (i.e., an item’s primary loading should be at least 0.200 larger than its secondary loading).
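The 0.200 rule can be checked mechanically. The sketch below assumes the pattern matrix is available as an array (items in rows, factors in columns, at least two factors) and compares absolute loadings, since a strong negative loading counts as much as a positive one. The function name, item names, and values are hypothetical.

```python
import numpy as np


def cross_loading_gaps(pattern, items, min_gap=0.2):
    """Return (item, primary, secondary) for items whose primary loading
    exceeds their secondary loading by less than min_gap.
    Loadings are compared by absolute value; needs at least two factors."""
    loads = np.abs(np.asarray(pattern, dtype=float))
    ordered = np.sort(loads, axis=1)  # ascending within each row
    primary, secondary = ordered[:, -1], ordered[:, -2]
    return [(item, float(p), float(s))
            for item, p, s in zip(items, primary, secondary)
            if p - s < min_gap]


# Hypothetical pattern matrix: item "b" loads on both factors.
pattern = [[0.80, 0.10],
           [0.50, 0.40],
           [0.05, 0.70]]
print(cross_loading_gaps(pattern, ["a", "b", "c"]))  # [('b', 0.5, 0.4)]
```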

Convergent validity

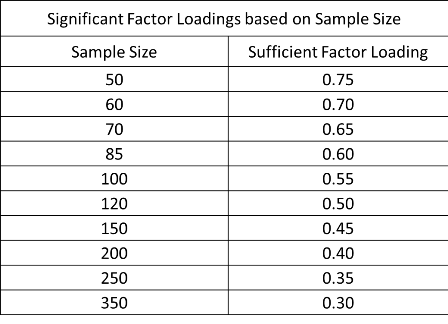

Convergent validity means that the variables within a single factor are highly correlated. This is evident from the factor loadings. Sufficient (i.e., significant) loadings depend on the sample size of your dataset: generally, the smaller the sample size, the higher the loading required. The accompanying table, Table 3-2 from page 117 of Hair et al. (2010), outlines the thresholds for significant factor loadings. Based on the pattern matrix above, we would need a sample size of at least 60-70 to achieve significant loadings for variables loyalty1 and loyalty7. Regardless of sample size, it is best to have loadings greater than 0.500 that average more than 0.700 for each factor.
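The thresholds and rules of thumb in this paragraph can be encoded as a small lookup. The sketch below stores Table 3-2 as (loading, minimum sample size) pairs, returns the smallest tabulated loading that would be significant for a given sample size, and summarizes each factor against the 0.500 and 0.700 guidelines. The table values are transcribed from the widely reproduced version of Hair et al.’s table and should be checked against your edition; the function names and example loadings are hypothetical.

```python
import numpy as np

# Significance thresholds from Hair et al. (2010), Table 3-2 (alpha = .05,
# power = 80%): (factor loading, minimum sample size). Transcribed from the
# commonly reproduced table; check it against your edition before relying on it.
HAIR_TABLE = [(0.30, 350), (0.35, 250), (0.40, 200), (0.45, 150), (0.50, 120),
              (0.55, 100), (0.60, 85), (0.65, 70), (0.70, 60), (0.75, 50)]


def min_significant_loading(n):
    """Smallest tabulated loading that is significant for sample size n,
    or None if n is below the smallest tabulated sample size (50)."""
    for loading, required_n in HAIR_TABLE:
        if n >= required_n:
            return loading
    return None


def convergent_summary(pattern):
    """For each factor (numbered from 1), summarize the loadings of the items
    that load most strongly on it: (mean, all > 0.5?, mean > 0.7?)."""
    loads = np.abs(np.asarray(pattern, dtype=float))
    primary_factor = loads.argmax(axis=1)
    summary = {}
    for f in range(loads.shape[1]):
        own = loads[primary_factor == f, f]
        if own.size:  # skip factors with no primary items
            summary[f + 1] = (round(float(own.mean()), 3),
                              bool((own > 0.5).all()),
                              bool(own.mean() > 0.7))
    return summary


print(min_significant_loading(65))  # 0.7
# Hypothetical pattern matrix: two factors, two items each.
print(convergent_summary([[0.80, 0.10], [0.75, 0.20],
                          [0.10, 0.60], [0.05, 0.55]]))
# {1: (0.775, True, True), 2: (0.575, True, False)}
```

In this hypothetical output, factor 2 passes the 0.500 floor but falls short of the 0.700 average.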

Discriminant validity

Discriminant validity refers to the extent to which factors are distinct and uncorrelated. The rule is that variables should relate more strongly to their own factor than to any other factor. Two primary methods exist for assessing discriminant validity during an EFA. The first method is to examine the pattern matrix: variables should load significantly on only one factor. If “cross-loadings” do exist (a variable loads on multiple factors), the primary and secondary loadings should differ by more than 0.2. The second method is to examine the factor correlation matrix, as shown below. Correlations between factors should not exceed 0.7: a correlation above 0.7 means the two factors share roughly half or more of their variance (0.7 × 0.7 = 0.49, i.e., 49% shared variance). As we can see from the factor correlation matrix below, factor 2 is too highly correlated with factors 1, 3, and 4.
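The factor correlation check, including the shared-variance arithmetic behind it, can be sketched as follows. The function assumes the factor correlation matrix has been copied into a square array; the function name, the 0.7 default, and the example values are illustrative.

```python
import numpy as np


def high_factor_correlations(factor_corr, limit=0.7):
    """List (factor i, factor j, r, shared variance r**2) for every pair of
    factors whose correlation magnitude exceeds `limit`. Factors are
    numbered from 1, as in typical EFA output."""
    phi = np.asarray(factor_corr, dtype=float)
    k = phi.shape[0]
    return [(i + 1, j + 1, float(phi[i, j]), round(float(phi[i, j]) ** 2, 2))
            for i in range(k) for j in range(i + 1, k)
            if abs(phi[i, j]) > limit]


# Hypothetical 3-factor correlation matrix: factors 1 and 2 overlap.
phi = [[1.00, 0.80, 0.20],
       [0.80, 1.00, 0.10],
       [0.20, 0.10, 1.00]]
print(high_factor_correlations(phi))  # [(1, 2, 0.8, 0.64)]
```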

What if you have discriminant validity problems? For example, the items from two theoretically different factors might end up loading on the same extracted factor instead of on separate factors. I have found the best way to resolve this type of issue is to run a separate EFA with just the items from the offending factors. Refine this smaller EFA by removing, one at a time, the items with the worst cross-loadings, then reinsert the remaining items into the full EFA. This will usually resolve the issue. If it doesn’t, consider whether these two factors are actually just two dimensions or manifestations of some higher-order factor. If so, you might run the EFA for this higher-order factor separately from all the items belonging to first-order factors. Then, during the CFA, make sure to model the higher-order factor properly as a second-order latent variable.
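The one-item-at-a-time procedure can be sketched as a loop. Because re-running an EFA depends on your software, the sketch takes a hypothetical callable, `fit_pattern`, that re-estimates the model on a list of items and returns their pattern matrix. It interprets “worst cross-loading” as the smallest gap between an item’s primary and secondary loadings; that interpretation and the stopping rules (a 0.2 gap, never fewer than three items) are assumptions borrowed from the guidelines elsewhere in this section.

```python
import numpy as np


def prune_cross_loaders(items, fit_pattern, min_gap=0.2, min_items=3):
    """Drop, one at a time, the item whose primary and secondary loadings are
    closest, re-fitting after each removal. Returns (kept, removed).

    fit_pattern(items) is a hypothetical stand-in for your EFA routine: it must
    return an items x factors pattern matrix with rows in the same order.
    """
    kept, removed = list(items), []
    while len(kept) > min_items:
        loads = np.sort(np.abs(np.asarray(fit_pattern(kept), dtype=float)), axis=1)
        gaps = loads[:, -1] - loads[:, -2]
        worst = int(gaps.argmin())
        if gaps[worst] >= min_gap:
            break  # every remaining item loads cleanly on one factor
        removed.append(kept.pop(worst))
    return kept, removed


# Hypothetical stub: fixed loadings instead of a real re-estimated EFA.
LOADINGS = {"t1": [0.80, 0.10], "t2": [0.75, 0.05], "t3": [0.50, 0.45],
            "s1": [0.10, 0.80], "s2": [0.20, 0.70], "s3": [0.35, 0.45]}
kept, removed = prune_cross_loaders(LOADINGS, lambda names: [LOADINGS[n] for n in names])
print(kept, removed)  # ['t1', 't2', 's1', 's2'] ['t3', 's3']
```

With a real EFA the loadings shift after every removal, which is why the sketch re-fits inside the loop rather than ranking all items once.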

Face validity

Face validity is very simple: do the factors make sense? For example, are variables that are similar in nature loading together on the same factor? If there are exceptions, are they explainable? Factors that demonstrate sufficient face validity should be easy to label. For example, in the pattern matrix above, we could easily label factor 1 “Trust in the Agent” (assuming the variable names are representative of the measure used to collect data for this variable). If all the “Trust” variables in the pattern matrix above loaded onto a single factor, we might have to abstract a bit and call this factor “Trust” rather than “Trust in the Agent” and “Trust in the Company”.

Reliability

Reliability refers to the consistency of the item-level errors within a single factor. Reliability means just what it sounds like: a “reliable” set of variables will consistently load on the same factor. The way to test reliability in an EFA is to compute Cronbach’s alpha for each factor. Cronbach’s alpha should be above 0.7, although, ceteris paribus, the value will generally increase for factors with more variables and decrease for factors with fewer variables. Each factor should ideally have at least three variables, although two are sometimes permissible.
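For reference, Cronbach’s alpha compares the sum of the item variances with the variance of the summed scale. The sketch below assumes raw item scores for one factor (respondents in rows, items in columns) with any reverse-worded items already recoded; the function name and example data are hypothetical.

```python
import numpy as np


def cronbach_alpha(scores):
    """Cronbach's alpha for an (n respondents x k items) score matrix:
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))."""
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = x.var(axis=0, ddof=1)
    total_var = x.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_vars.sum() / total_var)


# Hypothetical responses from three people to a two-item factor.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

With the average inter-item correlation r̄ held fixed, standardized alpha equals k·r̄ / (1 + (k - 1)·r̄), which rises with the number of items k; this is the ceteris paribus effect described above.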